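Contents

- Introduction to Hive engines

- Environment setup (SSH already configured)

- JDK setup

- Hadoop setup

- Cluster configuration

- Starting the cluster

- LZO compression configuration

- MySQL setup

- Hive setup

- Configuring the Hive metastore on MySQL

- Spark setup

- Hive on Spark configuration

- Testing Hive on Spark

- Spark on YARN and Spark on Hive configuration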

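Introduction to Hive engines

Hive supports three execution engines: MR (the default), Tez, and Spark.

MR (MapReduce) is the built-in engine; it ships with Hive and needs no extra configuration.

Hive on Spark: Hive stores the metadata and also parses and optimizes the SQL. The syntax is HQL, but the execution engine is Spark, which runs the query as RDD operations.

Spark on Hive: Hive only stores the metadata. Spark parses and optimizes the SQL, the syntax is Spark SQL, and Spark runs the query as RDD operations.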

Environment setup (SSH already configured)

- Java 1.8.0+

- Hadoop 2.7.0

- MySQL

- Hive 3.1.2

- Spark 2.3.0

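For convenience everything here runs on a single virtual machine. A multi-node cluster follows the same steps; the installation and configuration only need to be distributed to every node.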

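JDK setup

1) Remove any existing JDK

sudo rpm -qa | grep -i java | xargs -n1 sudo rpm -e --nodeps

2) Extract the JDK into /opt/module

tar -zxvf jdk-8u212-linux-x64.tar.gz -C /opt/module/

3) Configure the JDK environment variables

(1) Open /etc/profile, append the following lines, then save and quit with :wq

    #JAVA_HOME
    export JAVA_HOME=/opt/module/jdk1.8.0_212
    export PATH=$PATH:$JAVA_HOME/bin

(2) Apply the new environment variables

source /etc/profile

(3) Check that the JDK is installed correctly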

java -version
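
Editing /etc/profile by hand works, but repeating the step can leave duplicate lines behind. The sketch below shows one possible idempotent alternative. The helper name append_once and the PROFILE_FILE variable are assumptions made for illustration, and the default target is a scratch file rather than /etc/profile.

```shell
# Sketch: append a line to a profile file only if it is not already there.
# PROFILE_FILE is a hypothetical variable; point it at /etc/profile (with
# sudo) or at a file under /etc/profile.d/ once the result looks right.
PROFILE_FILE="${PROFILE_FILE:-./profile-sketch.sh}"

append_once() {
  # $1 = file, $2 = exact line to add
  touch "$1"
  # -x matches whole lines only, -F treats the pattern as a fixed string
  if ! grep -qxF -- "$2" "$1"; then
    printf '%s\n' "$2" >> "$1"
  fi
}

append_once "$PROFILE_FILE" 'export JAVA_HOME=/opt/module/jdk1.8.0_212'
append_once "$PROFILE_FILE" 'export PATH=$PATH:$JAVA_HOME/bin'
# Repeating a call leaves the file unchanged.
append_once "$PROFILE_FILE" 'export JAVA_HOME=/opt/module/jdk1.8.0_212'

cat "$PROFILE_FILE"
```

The same helper can be reused for the HADOOP_HOME, HIVE_HOME, and SPARK_HOME lines added later in this article.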

Hadoop setup

Deployment

1) Change to the directory that holds the Hadoop package

cd /opt/software/

2) Download the release and extract it into /opt/module

Release notes: https://hadoop.apache.org/release/2.7.0.html

    wget https://archive.apache.org/dist/hadoop/common/hadoop-2.7.0/hadoop-2.7.0.tar.gz
    tar -zxvf hadoop-2.7.0.tar.gz -C /opt/module/

3) Add Hadoop to the environment variables

(1) Note the Hadoop install path

/opt/module/hadoop-2.7.0

(2) Open /etc/profile and append the Hadoop paths at the end of the file (in vim, Shift+G jumps to the last line)

    sudo vim /etc/profile

    #HADOOP_HOME
    export HADOOP_HOME=/opt/module/hadoop-2.7.0
    export PATH=$PATH:$HADOOP_HOME/bin
    export PATH=$PATH:$HADOOP_HOME/sbin

(3) Apply the changes

source /etc/profile

Cluster configuration

1) Core configuration file

Configure core-site.xml (hadoop-2.7.0/etc/hadoop/core-site.xml). Each entry below is one property of the file, written as name = value:

- fs.defaultFS = hdfs://192.168.1.250:8020
- hadoop.tmp.dir = /mnt/data_online/hadoop-data
- hadoop.http.staticuser.user = atomecho
- hadoop.proxyuser.atomecho.hosts = *
- hadoop.proxyuser.atomecho.groups = *
- io.compression.codecs = org.apache.hadoop.io.compress.GzipCodec, org.apache.hadoop.io.compress.DefaultCodec, org.apache.hadoop.io.compress.BZip2Codec, org.apache.hadoop.io.compress.SnappyCodec, com.hadoop.compression.lzo.LzoCodec, com.hadoop.compression.lzo.LzopCodec
- io.compression.codec.lzo.class = com.hadoop.compression.lzo.LzoCodec

2) HDFS configuration file

Configure hdfs-site.xml (name = value):

- dfs.namenode.http-address = 192.168.1.250:9870
- dfs.namenode.secondary.http-address = 192.168.1.250:9868
- dfs.replication = 1

3) YARN configuration file

Configure yarn-site.xml (name = value):

- yarn.nodemanager.aux-services = mapreduce_shuffle
- yarn.resourcemanager.hostname = 192.168.1.250
- yarn.nodemanager.env-whitelist = JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME
- yarn.scheduler.minimum-allocation-mb = 512
- yarn.scheduler.maximum-allocation-mb = 12288
- yarn.nodemanager.resource.memory-mb = 12288
- yarn.nodemanager.pmem-check-enabled = false
- yarn.nodemanager.vmem-check-enabled = false
- yarn.log-aggregation-enable = true
- yarn.log.server.url = http://192.168.1.250:19888/jobhistory/logs
- yarn.log-aggregation.retain-seconds = 604800

4) MapReduce configuration file

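Configure mapred-site.xml (name = value):

- mapreduce.framework.name = yarn
- mapreduce.jobhistory.address = 192.168.1.250:10020
- mapreduce.jobhistory.webapp.address = 192.168.1.250:19888

5) Configure the worker list (in Hadoop 2.x the file is etc/hadoop/slaves; it was renamed to workers in Hadoop 3)

192.168.1.250

6) Configure hadoop-env.sh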

export JAVA_HOME=/opt/module/jdk1.8.0_212
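
The site files configured above are XML documents in which every setting is a property element holding a name and a value inside one configuration element. As a rough sketch of that layout, the snippet below writes three of the core-site.xml settings from this section. The CONF_DIR variable and the xml_property helper are hypothetical names, and the output goes to a scratch directory, not to the real Hadoop configuration.

```shell
# Sketch: generate a Hadoop-style XML config from name/value pairs.
# CONF_DIR is a hypothetical scratch location; the real file lives in
# /opt/module/hadoop-2.7.0/etc/hadoop/core-site.xml.
CONF_DIR="${CONF_DIR:-./conf-sketch}"
mkdir -p "$CONF_DIR"

xml_property() {
  # $1 = property name, $2 = property value
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

{
  printf '<?xml version="1.0" encoding="UTF-8"?>\n'
  printf '<configuration>\n'
  xml_property fs.defaultFS hdfs://192.168.1.250:8020
  xml_property hadoop.tmp.dir /mnt/data_online/hadoop-data
  xml_property hadoop.http.staticuser.user atomecho
  printf '</configuration>\n'
} > "$CONF_DIR/core-site.xml"

cat "$CONF_DIR/core-site.xml"
```

The helper does no XML escaping, so values containing characters such as & or < would need to be escaped by hand. The remaining properties, and the other site files, follow the same pattern.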

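Starting the cluster

(1) If the cluster is being started for the first time, format the NameNode on node 192.168.1.250. (Before re-formatting an existing cluster, first stop all NameNode and DataNode processes from the previous run, then delete the old data and log directories.)

bin/hdfs namenode -format

(2) Start HDFS

sbin/start-dfs.sh

(3) Start YARN on the node configured as the ResourceManager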

sbin/start-yarn.sh

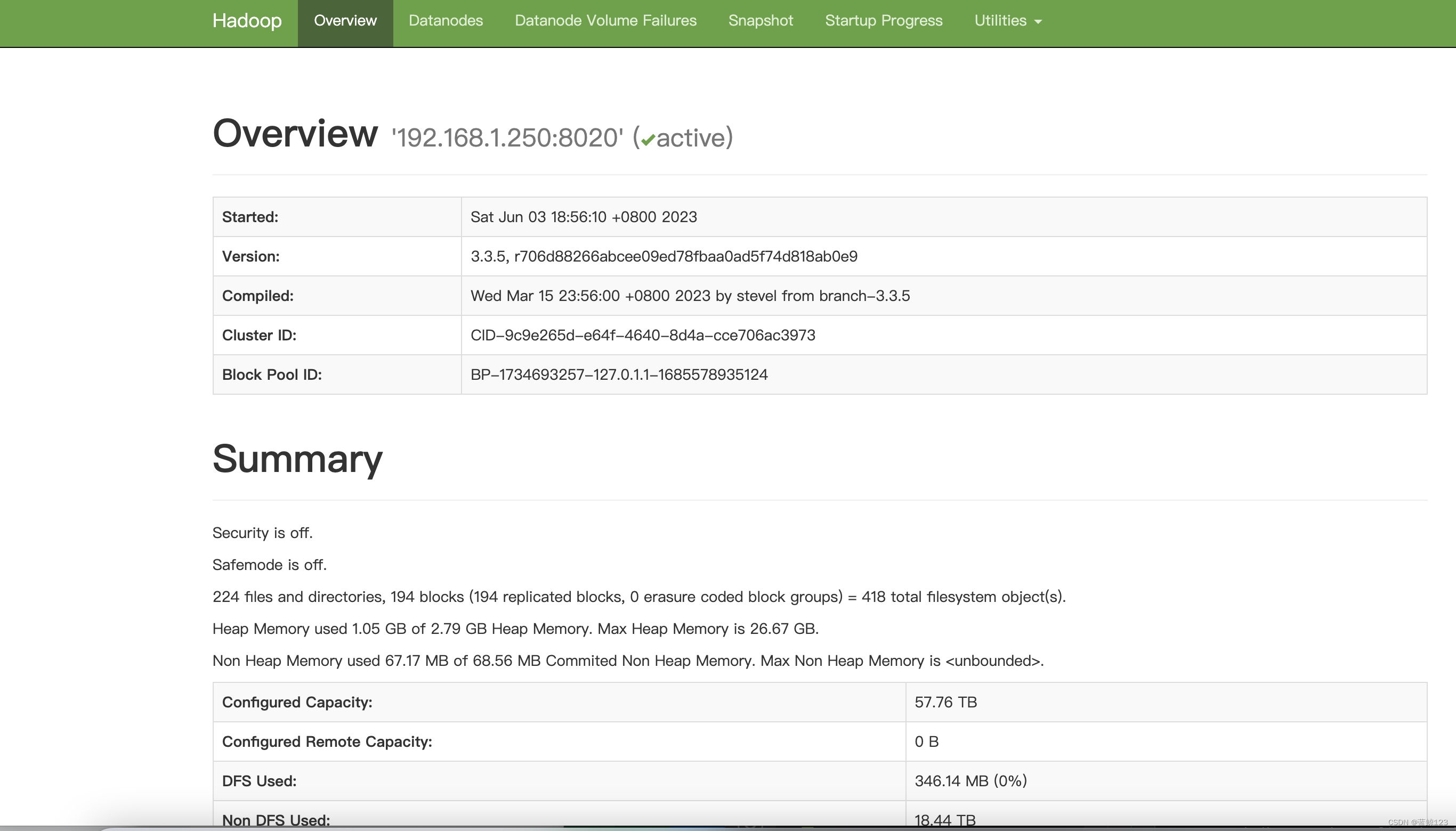

(4) HDFS web UI: http://192.168.1.250:9870

(5) SecondaryNameNode web UI: http://192.168.1.250:9868/status.html (shows nothing in single-node mode)

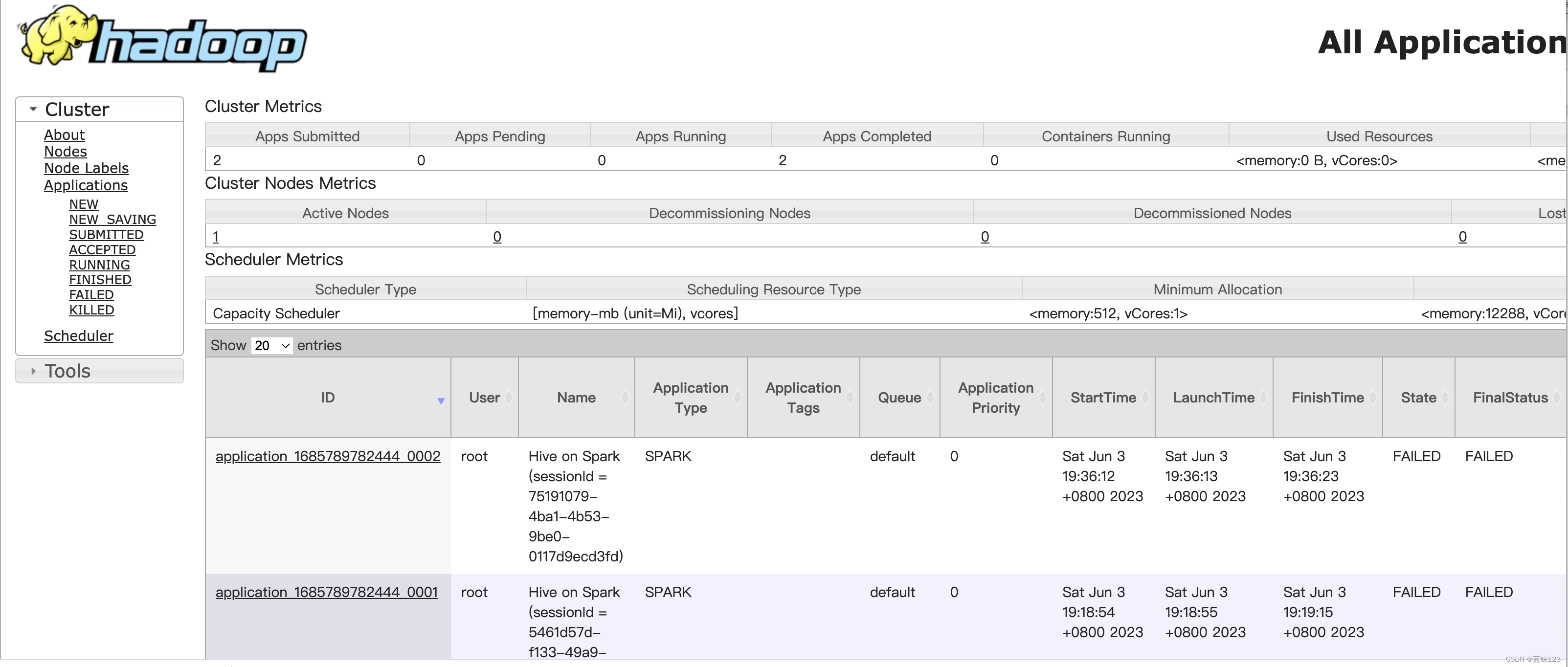

(6) ResourceManager web UI: http://192.168.1.250:8088/cluster
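
Besides the web pages, the running daemons can be checked from the command line with the JDK's jps tool, which prints one "pid name" line per Java process. The sketch below reports which of the five daemons started by start-dfs.sh and start-yarn.sh are missing from such a listing. The function name missing_daemons and the EXPECTED list are assumptions made for this single-node layout.

```shell
# Sketch: report which expected Hadoop daemons are missing from a jps listing.
EXPECTED="NameNode DataNode SecondaryNameNode ResourceManager NodeManager"

missing_daemons() {
  # Reads "pid name" lines (the jps output format) from stdin and prints
  # every expected daemon name that does not appear.
  listing=$(cat)
  for daemon in $EXPECTED; do
    # Compare against the whole second field so that "NameNode"
    # is not satisfied by "SecondaryNameNode".
    if ! printf '%s\n' "$listing" |
        awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
      printf '%s\n' "$daemon"
    fi
  done
}

if command -v jps >/dev/null 2>&1; then
  jps | missing_daemons
else
  echo "jps not found; is the JDK on PATH?" >&2
fi
```

An empty result means all five daemons are up. Any name that is printed points at the log file to look at under the Hadoop logs directory.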

LZO compression configuration

1) Build hadoop-lzo

    # build and install the native LZO library
    wget https://www.oberhumer.com/opensource/lzo/download/lzo-2.10.tar.gz
    tar -zxvf lzo-2.10.tar.gz
    cd lzo-2.10
    ./configure --enable-shared --prefix /usr/local/lzo-2.10
    make && sudo make install

    # build hadoop-lzo (run from the root of the hadoop-lzo source tree)
    C_INCLUDE_PATH=/usr/local/lzo-2.10/include \
    LIBRARY_PATH=/usr/local/lzo-2.10/lib \
    mvn clean package

2) Copy the resulting hadoop-lzo-0.4.20.jar into /opt/module/hadoop-2.7.0/share/hadoop/common/

    $ pwd
    /opt/module/hadoop-2.7.0/share/hadoop/common/
    $ ls
    hadoop-lzo-0.4.20.jar

3) Add the LZO codecs to core-site.xml (name = value)

- io.compression.codecs = org.apache.hadoop.io.compress.GzipCodec, org.apache.hadoop.io.compress.DefaultCodec, org.apache.hadoop.io.compress.BZip2Codec, org.apache.hadoop.io.compress.SnappyCodec, com.hadoop.compression.lzo.LzoCodec, com.hadoop.compression.lzo.LzopCodec
- io.compression.codec.lzo.class = com.hadoop.compression.lzo.LzoCodec

Hadoop 2.x port summary

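MySQL setup

Install MySQL, then allow the root user to connect from any host:

1) Switch to the mysql database

mysql> use mysql

2) Query the user table

mysql> select user, host from user;

3) Update the user table, setting the host column to % for root

mysql> update user set host="%" where user="root";

4) Reload the privileges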

mysql> flush privileges;

Hive setup

Download Hive: https://dlcdn.apache.org/hive/

1) Upload apache-hive-3.1.2-bin.tar.gz to /opt/software on the Linux machine

2) Extract apache-hive-3.1.2-bin.tar.gz into /opt/module

tar -zxvf /opt/software/apache-hive-3.1.2-bin.tar.gz -C /opt/module/

3) Rename the extracted directory apache-hive-3.1.2-bin to hive

mv /opt/module/apache-hive-3.1.2-bin/ /opt/module/hive

4) Edit /etc/profile and add the Hive environment variables

    sudo vim /etc/profile

    #HIVE_HOME
    export HIVE_HOME=/opt/module/hive
    export PATH=$PATH:$HIVE_HOME/bin

Source /etc/profile so that the environment variables take effect

source /etc/profile

Configuring the Hive metastore on MySQL

- Copy the driver

Download the MySQL JDBC driver: https://repo1.maven.org/maven2/mysql/mysql-connector-java/8.0.30/

Copy the MySQL JDBC driver into Hive's lib directory. Adjust the jar name to the version actually downloaded: the link above is for 8.0.30, while the command below uses 8.0.33.

cp /opt/software/mysql-connector-j-8.0.33.jar /opt/module/hive/lib/

- Point the metastore at MySQL

Create hive-site.xml in the $HIVE_HOME/conf directory

vim hive-site.xml

Add the following properties (name = value)

- javax.jdo.option.ConnectionURL = jdbc:mysql://192.168.1.249:3306/metastore?useSSL=false
- javax.jdo.option.ConnectionDriverName = com.mysql.jdbc.Driver
- javax.jdo.option.ConnectionUserName = root
- javax.jdo.option.ConnectionPassword = Lettcue2kg
- hive.metastore.warehouse.dir = /user/hive/warehouse
- hive.metastore.schema.verification = false
- hive.server2.thrift.port = 10000
- hive.server2.thrift.bind.host = 192.168.1.249
- hive.metastore.event.db.notification.api.auth = false
- hive.cli.print.header = true
- hive.cli.print.current.db = true
- spark.yarn.jars = hdfs://192.168.1.250:8020/spark-jars/*
- hive.execution.engine = spark
- hive.spark.client.connect.timeout = 10000ms

- Start Hive

Initialize the metastore database

1) Log in to MySQL

mysql -uroot -p

2) Create the Hive metastore database

    mysql> create database metastore;
    mysql> quit;

3) Initialize the Hive metastore schema

schematool -initSchema -dbType mysql -verbose

- Start the Hive client

1) Start the Hive client

bin/hive

2) List the databases

    hive (default)> show databases;
    OK
    database_name
    default

Spark setup

(1) Spark download page:

http://spark.apache.org/downloads.html

(2) Download and extract spark-2.3.0-bin-hadoop2.7.tgz

    wget https://archive.apache.org/dist/spark/spark-2.3.0/spark-2.3.0-bin-hadoop2.7.tgz
    tar -zxvf spark-2.3.0-bin-hadoop2.7.tgz -C /opt/module/
    mv /opt/module/spark-2.3.0-bin-hadoop2.7 /opt/module/spark

(3) Configure the SPARK_HOME environment variable

    sudo vim /etc/profile

    # SPARK_HOME
    export SPARK_HOME=/opt/module/spark
    export PATH=$PATH:$SPARK_HOME/bin

Source the file so that the variables take effect

source /etc/profile

(4) Create the Spark configuration file inside Hive

Open /opt/module/hive/conf/spark-defaults.conf and add the following settings, which are applied when Hive runs a job on Spark

    vim /opt/module/hive/conf/spark-defaults.conf

    spark.master            yarn
    spark.eventLog.enabled  true
    spark.eventLog.dir      hdfs://192.168.1.250:8020/spark-history
    spark.executor.memory   2g
    spark.driver.memory     1g

Create the following HDFS path to store the history logs

hadoop fs -mkdir /spark-history

(5) Extract the "without Hadoop" Spark build, whose jars will be uploaded to HDFS

Upload spark-2.3.0-bin-without-hadoop.tgz to /opt/software and extract it

tar -zxvf /opt/software/spark-2.3.0-bin-without-hadoop.tgz

(6) Upload the jars of the "without Hadoop" build to HDFS

    hadoop fs -mkdir /spark-jars
    hadoop fs -put spark-2.3.0-bin-without-hadoop/jars/* /spark-jars
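
The HDFS preparation above can be collected into one repeatable script. The sketch below is one possible way to do that, not the article's original procedure. DRY_RUN, run, prepare_hdfs, and SPARK_JARS_SRC are hypothetical names, and with the default DRY_RUN=1 the commands are only printed, so the script can be inspected before anything touches HDFS.

```shell
# Sketch: create the HDFS directories used above and upload the Spark jars.
# DRY_RUN and run() are hypothetical helpers; with DRY_RUN=1 (the default
# here) each command is printed instead of executed.
DRY_RUN="${DRY_RUN:-1}"
SPARK_JARS_SRC="${SPARK_JARS_SRC:-spark-2.3.0-bin-without-hadoop/jars}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

prepare_hdfs() {
  # -p creates parent directories and does not fail if the path exists
  run hadoop fs -mkdir -p /spark-history
  run hadoop fs -mkdir -p /spark-jars
  # -f overwrites jars left over from an earlier upload
  run hadoop fs -put -f "$SPARK_JARS_SRC"/* /spark-jars
}

prepare_hdfs
```

Setting DRY_RUN=0 executes the commands for real. The -p and -f flags are what make a second run harmless.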

Hive on Spark configuration

Edit hive-site.xml

vim /opt/module/hive/conf/hive-site.xml

Add the following properties (name = value)

- spark.yarn.jars = hdfs://192.168.1.250:8020/spark-jars/*
- hive.execution.engine = spark
- hive.spark.client.connect.timeout = 10000ms

1) Compatibility notes

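Note: the Hive 3.1.2 binaries from the official site are not compatible with Spark 3.0.0 out of the box. The stock Hive 3.1.2 release is compiled against Spark 2.x (its pom.xml sets spark.version to 2.3.0, the version used in this article), so running it on Spark 3.0.0 requires recompiling Hive 3.1.2.

Build steps: download the Hive 3.1.2 source from the official site and change the Spark version referenced in the pom file to 3.0.0. If the build succeeds, package it and take the resulting jars. If it fails, fix the affected methods as the error messages indicate until the build passes, then package it.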

Testing Hive on Spark

(1) Start the Hive client

bin/hive

(2) Create a test table

hive (default)> create table huanhuan(id int, name string);

    hive (default)> show tables;
    OK
    tab_name
    huanhuan
    Time taken: 0.117 seconds, Fetched: 1 row(s)

(3) Test with an insert

    hive (default)> insert into huanhuan values(1,'haoge');
    Query ID = root_20230604114221_a1118af6-6182-455b-80fa-308382ddbee0
    Total jobs = 1
    Launching Job 1 out of 1
    In order to change the average load for a reducer (in bytes):
      set hive.exec.reducers.bytes.per.reducer=<number>
    In order to limit the maximum number of reducers:
      set hive.exec.reducers.max=<number>
    In order to set a constant number of reducers:
      set mapreduce.job.reduces=<number>
    Running with YARN Application = application_1685849514092_0001
    Kill Command = /opt/module/hadoop-2.7.0/bin/yarn application -kill application_1685849514092_0001
    Hive on Spark Session Web UI URL: http://192.168.1.250:43725
    Query Hive on Spark job[0] stages: [0, 1]
    Spark job[0] status = RUNNING
    --------------------------------------------------------------------------------------
              STAGES   ATTEMPT        STATUS  TOTAL  COMPLETED  RUNNING  PENDING  FAILED
    --------------------------------------------------------------------------------------
    Stage-0 ........         0      FINISHED      1          1        0        0       0
    Stage-1 ........         0      FINISHED      1          1        0        0       0
    --------------------------------------------------------------------------------------
    STAGES: 02/02    [==========================>>] 100%  ELAPSED TIME: 23.28 s
    --------------------------------------------------------------------------------------
    Spark job[0] finished successfully in 23.28 second(s)
    Loading data to table default.huanhuan
    OK
    col1 col2
    Time taken: 98.25 seconds

Spark on YARN and Spark on Hive configuration

- Edit $SPARK_HOME/conf/spark-defaults.conf

    spark.master            yarn
    spark.driver.memory     512m
    spark.yarn.am.memory    512m
    spark.executor.memory   512m

    # Spark event log and history server settings
    spark.eventLog.enabled            true
    spark.eventLog.dir                hdfs://192.168.1.250:8020/spark-logs
    spark.history.provider            org.apache.spark.deploy.history.FsHistoryProvider
    spark.history.fs.logDirectory     hdfs://192.168.1.250:8020/spark-logs
    spark.history.fs.update.interval  10s
    spark.history.ui.port             18080

- Edit /etc/profile

    export HADOOP_HOME=/opt/module/hadoop-2.7.0
    export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
    export LD_LIBRARY_PATH=$HADOOP_HOME/lib/native:$LD_LIBRARY_PATH

- Source /etc/profile

source /etc/profile

- Copy the Hadoop and Hive configuration into Spark

Copy the following Hadoop files

core-site.xml

hdfs-site.xml

to $SPARK_HOME/conf/

Copy the following Hive file

hive-site.xml

to $SPARK_HOME/conf/

- Copy the MySQL driver

cp mysql-connector-java-5.1.38-bin.jar $SPARK_HOME/jars/
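
The configuration copy described above is given without commands. A minimal sketch follows, assuming the install paths used in this article. The function names copy_conf and sync_spark_conf are made up for illustration, and a missing source file is reported rather than treated as fatal.

```shell
# Sketch: copy the Hadoop and Hive client configs into Spark's conf directory.
# The defaults follow the install paths used in this article.
HADOOP_CONF_DIR="${HADOOP_CONF_DIR:-/opt/module/hadoop-2.7.0/etc/hadoop}"
HIVE_CONF_DIR="${HIVE_CONF_DIR:-/opt/module/hive/conf}"
SPARK_CONF_DIR="${SPARK_CONF_DIR:-/opt/module/spark/conf}"

copy_conf() {
  # $1 = source file, $2 = destination directory
  if [ -f "$1" ]; then
    cp "$1" "$2"/ && echo "copied $1"
  else
    echo "missing: $1" >&2
  fi
}

sync_spark_conf() {
  copy_conf "$HADOOP_CONF_DIR/core-site.xml" "$SPARK_CONF_DIR"
  copy_conf "$HADOOP_CONF_DIR/hdfs-site.xml" "$SPARK_CONF_DIR"
  copy_conf "$HIVE_CONF_DIR/hive-site.xml" "$SPARK_CONF_DIR"
}

sync_spark_conf
```

With hive-site.xml in place, a Spark session created with Hive support can find the metastore connection settings.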

Example

main.py

    from pyspark.sql import SparkSession

    # enableHiveSupport() lets Spark SQL use the Hive metastore from hive-site.xml
    spark = SparkSession.builder.enableHiveSupport().getOrCreate()

    # list the databases registered in the Hive metastore
    df = spark.sql("show databases")
    df.show()

    # see also: https://www.projectpro.io/recipes/write-csv-data-table-hive-pyspark

Submit script

submit.sh

    SPARK_PATH=/opt/module/spark
    YARN_QUEUE=default
    # DEPLOY_MODE=cluster
    DEPLOY_MODE=client

    ${SPARK_PATH}/bin/spark-submit \
      --master yarn \
      --name "spark_demo_lr" \
      --queue ${YARN_QUEUE} \
      --deploy-mode ${DEPLOY_MODE} \
      --driver-memory 4g \
      --driver-cores 2 \
      --executor-memory 4g \
      --executor-cores 2 \
      --num-executors 2 \
      --conf spark.default.parallelism=10 \
      --conf spark.executor.memoryOverhead=2g \
      --conf spark.driver.memoryOverhead=1g \
      --conf spark.yarn.maxAppAttempts=1 \
      --conf spark.yarn.submit.waitAppCompletion=true \
      ./main.py
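
It can help to compare the memory requested in submit.sh with what YARN was given in yarn-site.xml earlier. The sketch below does that arithmetic using only numbers that appear in this article. It assumes YARN rounds each container request up to a multiple of yarn.scheduler.minimum-allocation-mb, which is how the default scheduler setup is generally described. The variable names and the round_up helper are illustrative.

```shell
# Sketch: compare the executor memory requested in submit.sh with the
# memory YARN may hand out on the single node configured in yarn-site.xml.
NODE_MB=12288          # yarn.nodemanager.resource.memory-mb
MIN_ALLOC_MB=512       # yarn.scheduler.minimum-allocation-mb
EXEC_MB=4096           # --executor-memory 4g
EXEC_OVERHEAD_MB=2048  # spark.executor.memoryOverhead=2g
NUM_EXECUTORS=2        # --num-executors 2

round_up() {
  # Round $1 up to the next multiple of $2 (assumed YARN rounding rule).
  echo $(( ($1 + $2 - 1) / $2 * $2 ))
}

container_mb=$(round_up $(( EXEC_MB + EXEC_OVERHEAD_MB )) "$MIN_ALLOC_MB")
executors_mb=$(( container_mb * NUM_EXECUTORS ))
left_mb=$(( NODE_MB - executors_mb ))

echo "per-executor container: ${container_mb} MB"
echo "all executors: ${executors_mb} MB of ${NODE_MB} MB"
echo "left for the ApplicationMaster: ${left_mb} MB"
```

Under these assumptions the two executors alone ask for the node's entire 12288 MB, leaving nothing for the ApplicationMaster container, so on the single-node setup described here YARN may not be able to start both executors. Lowering the executor memory, the overhead, or the executor count is one way to make the job fit.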
