一、爬虫爬取的招聘网站数据

二、在MySQL中创建空表

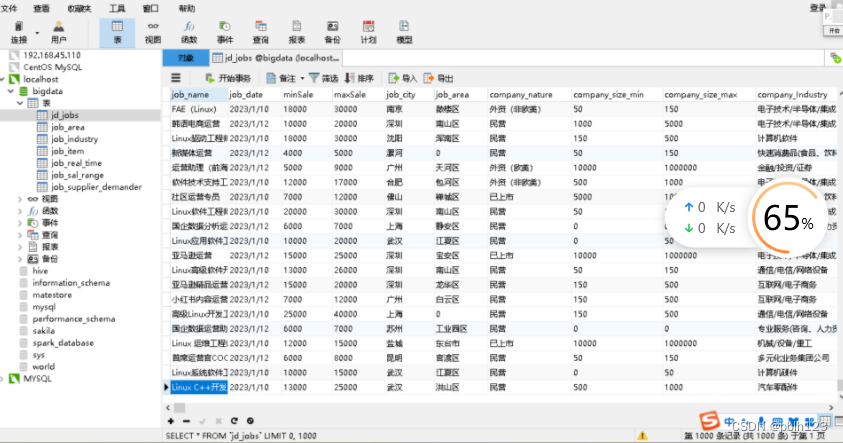

SET FOREIGN_KEY_CHECKS=0; -- ---------------------------- -- Table structure for jd_jobs -- ---------------------------- DROP TABLE IF EXISTS `jd_jobs`; CREATE TABLE `jd_jobs` ( `job_name` text, `job_date` text, `minSale` text, `maxSale` text, `job_city` text, `job_area` text, `company_nature` text, `company_size_min` text, `company_size_max` text, `company_Industry` text ) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

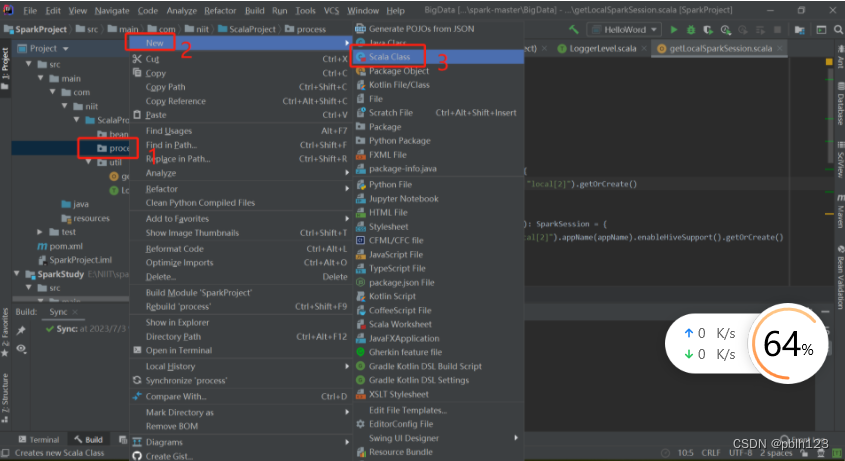

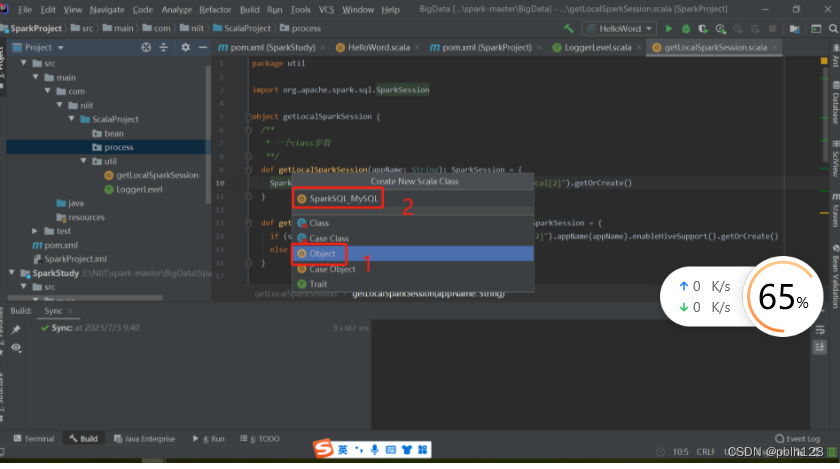

三、通过Spark导入招聘数据到MySQL中

以下是完整代码:

import java.util.Properties

import com.niit.util.{LoggerLevel, SparkUnit}

object SparkSQL_MySQL extends LoggerLevel {

def main(args: Array[String]): Unit = {

/**

* 读取数据,写入MySQL

*

* 实现:

* 1. 读取文件

* 2. 转为table操作对象

* 3. 写SQL查询

* 4. 保存查询SQL的对象

**/

val ss = SparkUnit.getLocalSparkSession("OffLine")

// 读取两个文件

val path = "D:\temp\"

val jd_jobs = ss.read

.option("header", false)

.option("encoding","gbk")

.option("delimiter", ",")

.csv(path + "51_jobs_data.csv").toDF("job_name", "job_date", "minSale","maxSale","job_city","job_area","company_nature","company_size_min","company_size_max","company_Industry")

jd_jobs.show()

// SQL式编程需要转为table结构

jd_jobs.createTempView("jd_jobs")

// select

val jobs = ss.sql(

"""

| SELECT job_name, job_date, minSale, maxSale,job_city,job_area,

| company_nature,company_size_min,company_size_max,company_Industry

| from jd_jobs

|""".stripMargin)

// JDBC

val url = "jdbc:mysql://localhost:3306/bigdata?useUnicode=true&characterEncoding=UTF-8&zeroDateTimeBehavior=convertToNull&serverTimezone=UTC"

val tb = "jd_jobs"

val properties = new Properties()

properties.setProperty("user", "root")

properties.setProperty("password", "123456")

properties.setProperty("driverClass", "com.mysql.jdbc.Driver")

// save

jobs.write.mode("overwrite").jdbc(url, tb, properties)

// tips :除了查询SQL返回对象,然后保存对象

// 还可以直接create table as select ... 一步到位

}

}

四、导入MySQL

猜你喜欢

网友评论

- 搜索

- 最新文章

- 热门文章