Contents

1. Prerequisites

1.1. Three packages: hadoop-3.1.3.tar.gz, jdk-8u212-linux-x64.tar.gz, apache-zookeeper-3.5.7-bin.tar.gz (share-link extraction code: k5y6)

2. Extract the packages and configure environment variables

3. Name the three nodes master, slave1, and slave2 and set up passwordless login

4. Build the ZooKeeper cluster

5. Distribute the extracted Java, /etc/profile, and ZooKeeper; change myid to 2 and 3

6. Start ZooKeeper

Check the status

vim core-site.xml

vim hdfs-site.xml

vim yarn-site.xml

6. Distribute Hadoop

7. Start HDFS in HA mode for the first time

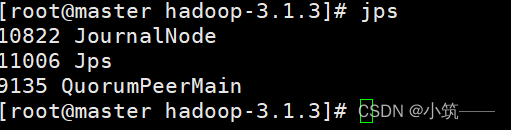

7.1. Start the ZooKeeper cluster from the master VM

7.2. Format ZooKeeper on the master VM

7.3. Start the journalnode process on each of master, slave1, and slave2

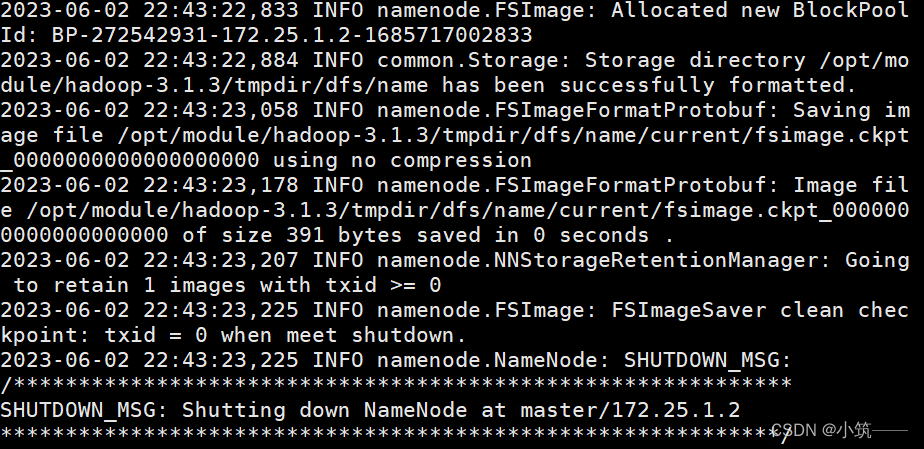

7.4. Then format the NameNode

7.5. Start the NameNodes and the cluster

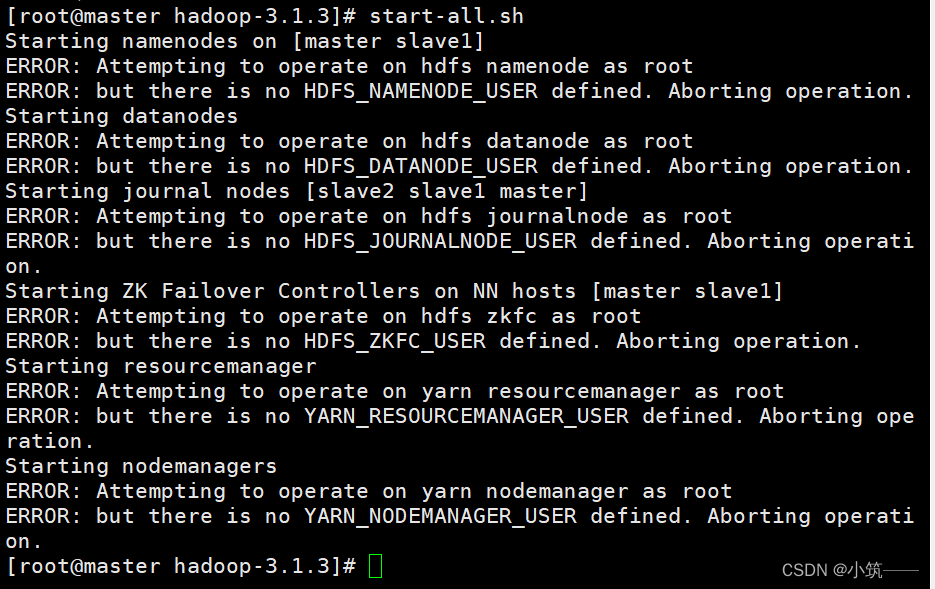

start-all.sh reports an error

hadoop-daemon.sh start namenode: start the NameNode on master by itself

hdfs namenode -bootstrapStandby: then sync the NameNode metadata on the other VM that will run a NameNode

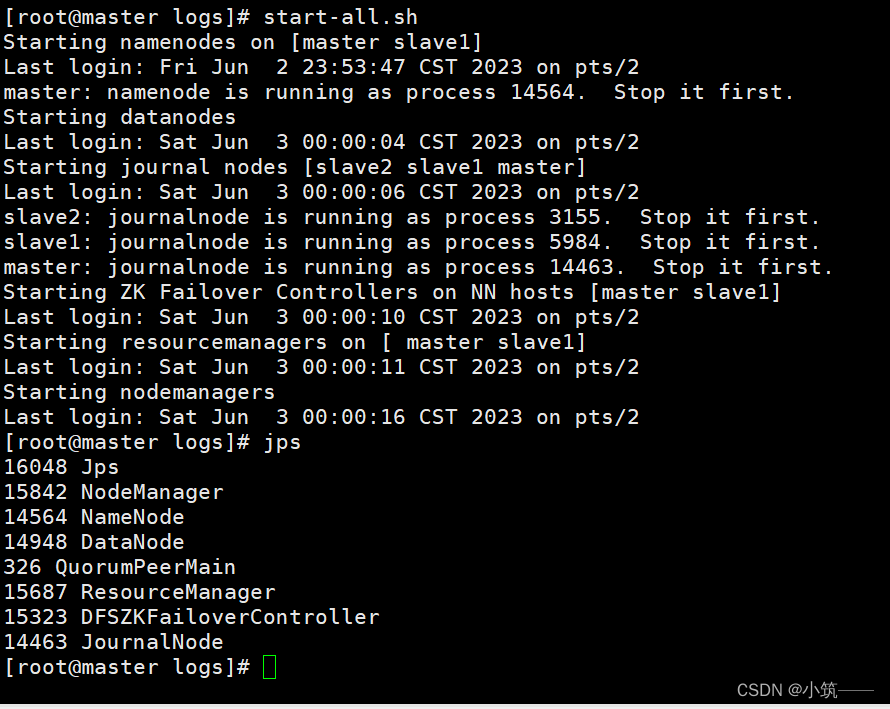

Finally, run start-all.sh

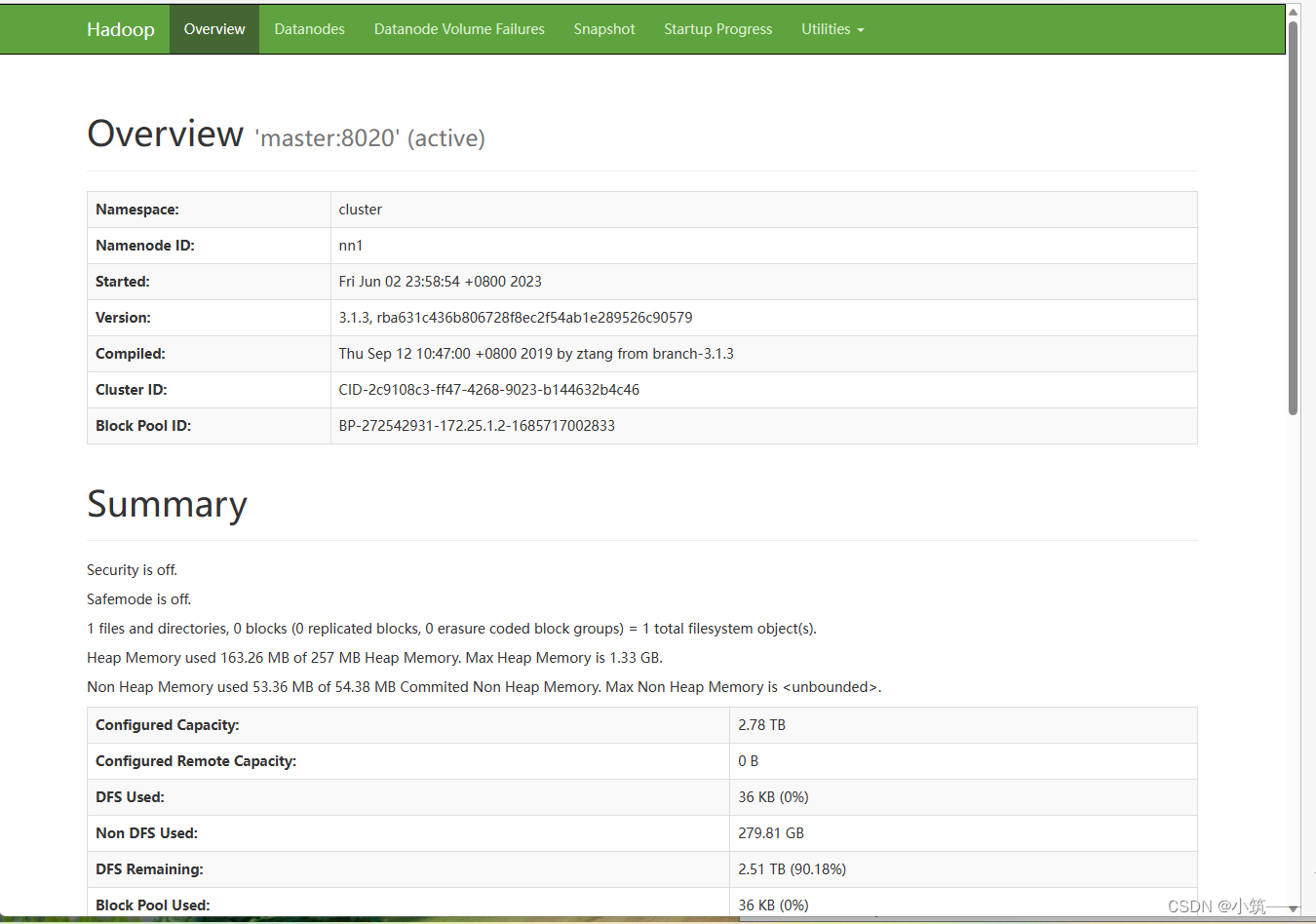

8. On the master node, check the state of services nn2 and rm2

hdfs haadmin -getServiceState nn2

yarn rmadmin -getServiceState rm2

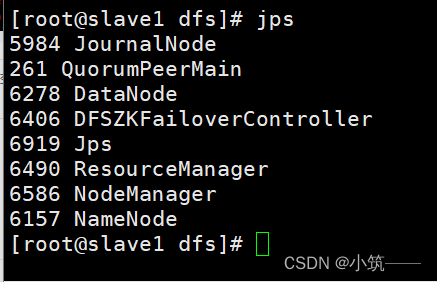

| Hostname | IP address | Processes |
| --- | --- | --- |
| master | your own | NameNode, DataNode, DFSZKFailoverController, QuorumPeerMain, JournalNode, ResourceManager, NodeManager |
| slave1 | your own | NameNode, DataNode, DFSZKFailoverController, QuorumPeerMain, JournalNode, ResourceManager, NodeManager |
| slave2 | your own | DataNode, NodeManager, QuorumPeerMain, JournalNode |

1. Prerequisites

1.1. Three packages: hadoop-3.1.3.tar.gz, jdk-8u212-linux-x64.tar.gz, apache-zookeeper-3.5.7-bin.tar.gz (share-link extraction code: k5y6)

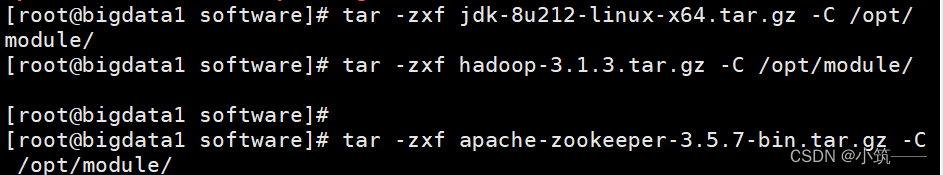

2. Extract the packages and configure environment variables

tar -zxf <tarball> -C <target directory>

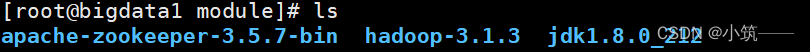

After extraction:

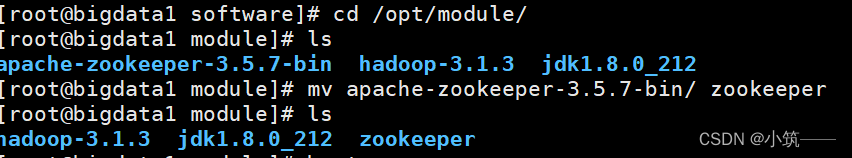

The directory name apache-zookeeper-3.5.7-bin is long and awkward to type, so you can rename it with mv,

or create a symbolic link to it with ln -s.
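As a rough sketch, the extraction and the symlink could be scripted as below. The tarball location `/opt/software` and the helper name `unpack_all` are assumptions made for illustration; only `/opt/module` and the package names come from the post.

```shell
# Assumed locations: tarballs live in $SRC (hypothetical), installs go under $DEST.
SRC="${SRC:-/opt/software}"
DEST="${DEST:-/opt/module}"

# Hypothetical helper: extract the three packages and add a short ZooKeeper path.
unpack_all() {
    mkdir -p "$DEST"
    for pkg in jdk-8u212-linux-x64.tar.gz hadoop-3.1.3.tar.gz apache-zookeeper-3.5.7-bin.tar.gz; do
        tar -zxf "$SRC/$pkg" -C "$DEST" || return 1
    done
    # A symlink keeps the versioned directory intact; mv would rename it instead.
    ln -sfn "$DEST/apache-zookeeper-3.5.7-bin" "$DEST/zookeeper"
}
```

Running `unpack_all` should leave `jdk1.8.0_212`, `hadoop-3.1.3`, and a `zookeeper` link under `/opt/module`.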

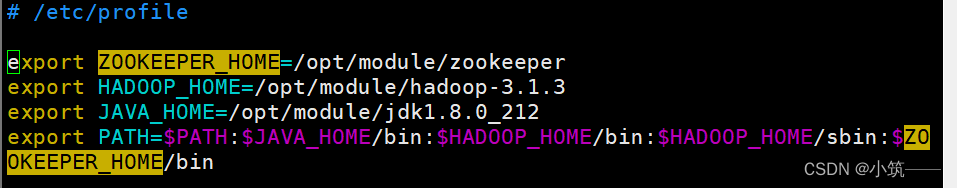

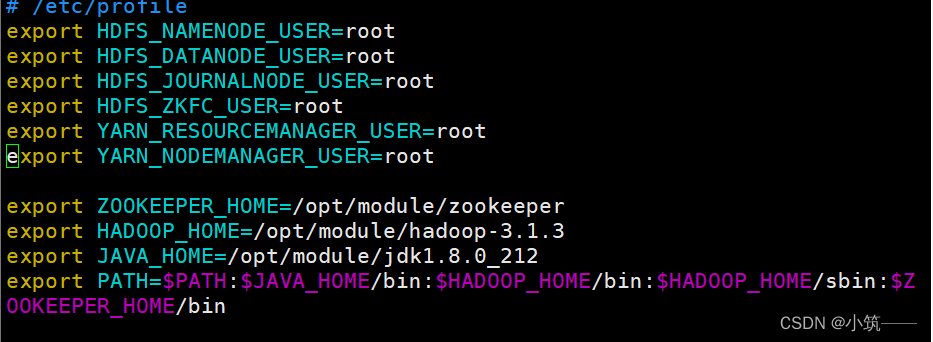

Edit /etc/profile with vim to configure the environment variables, then run source /etc/profile to apply them.
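The exact lines are only shown in the screenshot, so the following is a sketch of what the additions to /etc/profile might look like. The install paths match the ones used later in the post; the variable name `ZOOKEEPER_HOME` is an assumption.

```shell
# Lines to append to /etc/profile (paths from this post; ZOOKEEPER_HOME is an assumed name).
export JAVA_HOME=/opt/module/jdk1.8.0_212
export HADOOP_HOME=/opt/module/hadoop-3.1.3
export ZOOKEEPER_HOME=/opt/module/zookeeper
# sbin is needed for start-all.sh and hadoop-daemon.sh; ZooKeeper's bin holds zkServer.sh.
export PATH="$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin"
```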

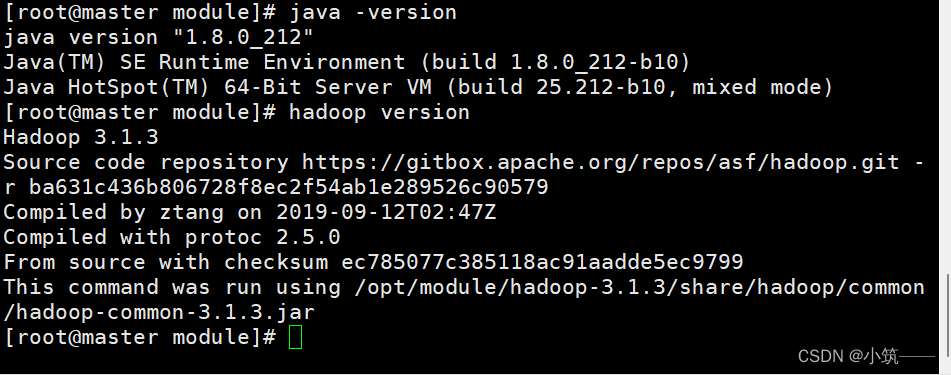

Verify:

hadoop version

java -version

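3. Name the three nodes master, slave1, and slave2 and set up passwordless login

Change the hostname on each node, then disconnect and reconnect so the new name takes effect:

hostnamectl set-hostname <hostname>

Passwordless SSH login was covered in the earlier post on the fully distributed Hadoop setup, so it is not repeated here.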

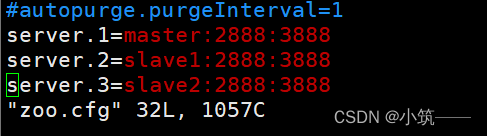

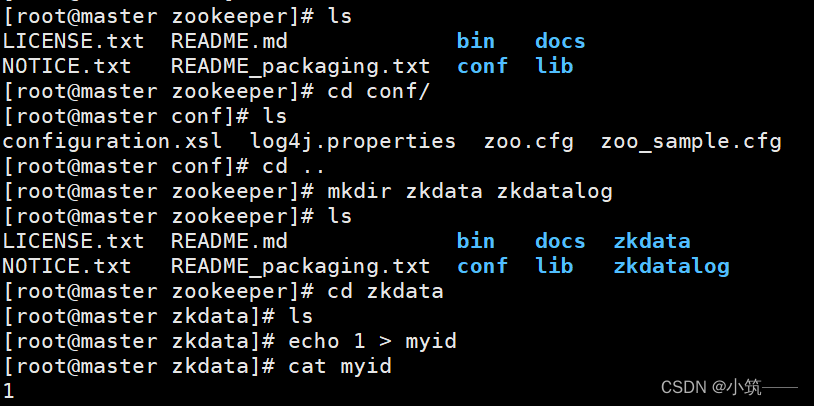

4. Build the ZooKeeper cluster

cd /opt/module/zookeeper/conf

cp zoo_sample.cfg zoo.cfg

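Edit zoo.cfg and add the following configuration.

Create the zkdata and zkdatalog directories at the paths set in the configuration. Then, inside zkdata, create a file named myid (touch it and edit it, or write the value directly with echo) that contains 1 on master; slave1 and slave2 use 2 and 3 respectively.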
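The zoo.cfg additions themselves are not reproduced in the text, so the following is only a sketch of a typical three-node setup. The helper name `configure_zk`, the placement of zkdata and zkdatalog under the ZooKeeper directory, and the 2888:3888 peer ports are assumptions; the hostnames come from the post.

```shell
# Hypothetical helper: build zoo.cfg from the sample and write this node's myid.
# Usage: configure_zk <zookeeper-home> <myid>
configure_zk() {
    zk_home="$1"; myid="$2"
    mkdir -p "$zk_home/zkdata" "$zk_home/zkdatalog"
    # Start from the sample, dropping its default dataDir so the key appears once.
    grep -v '^dataDir=' "$zk_home/conf/zoo_sample.cfg" > "$zk_home/conf/zoo.cfg"
    cat >> "$zk_home/conf/zoo.cfg" <<EOF
dataDir=$zk_home/zkdata
dataLogDir=$zk_home/zkdatalog
server.1=master:2888:3888
server.2=slave1:2888:3888
server.3=slave2:2888:3888
EOF
    # The number N in server.N must match the myid stored on that host.
    echo "$myid" > "$zk_home/zkdata/myid"
}
```

On master this would be called as `configure_zk /opt/module/zookeeper 1`.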

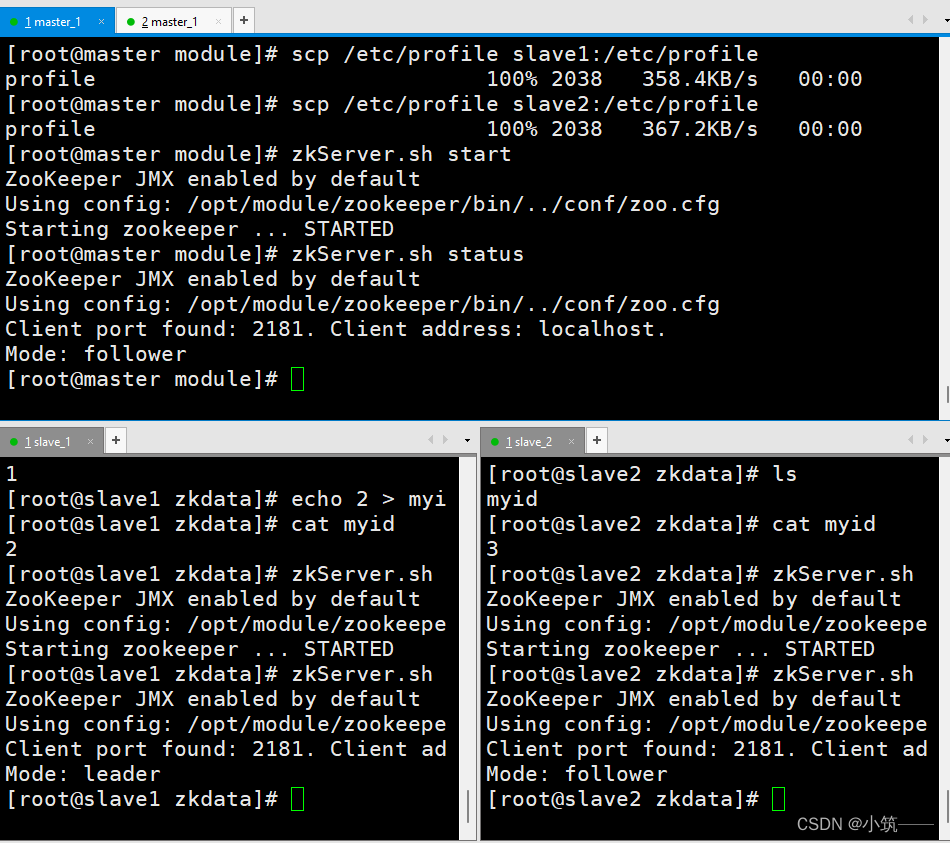

5. Distribute the extracted Java, /etc/profile, and ZooKeeper; change myid to 2 and 3

scp -r /opt/module/jdk1.8.0_212/ slave1:/opt/module/

scp -r /opt/module/jdk1.8.0_212/ slave2:/opt/module/

scp /etc/profile slave1:/etc/profile

scp /etc/profile slave2:/etc/profile (remember to run source /etc/profile on each slave afterwards)

scp -r /opt/module/zookeeper/ slave1:/opt/module/

scp -r /opt/module/zookeeper/ slave2:/opt/module/
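The copies above, plus the myid change, could be wrapped in one loop. This is a sketch that assumes passwordless SSH is already working and that zkdata sits under /opt/module/zookeeper (an assumption, since the post does not give the path); `distribute_zk` is a hypothetical helper name.

```shell
# Hypothetical helper: push JDK, profile, and ZooKeeper to both slaves, then fix myid.
distribute_zk() {
    id=2
    for host in slave1 slave2; do
        scp -r /opt/module/jdk1.8.0_212/ "$host:/opt/module/" || return 1
        scp /etc/profile "$host:/etc/profile" || return 1
        scp -r /opt/module/zookeeper/ "$host:/opt/module/" || return 1
        # The copied myid still holds master's value (1); overwrite it per host.
        ssh "$host" "echo $id > /opt/module/zookeeper/zkdata/myid" || return 1
        id=$((id + 1))
    done
}
```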

6. Start ZooKeeper

zkServer.sh start

Check the status:

zkServer.sh status
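zkServer.sh only controls the local server, so it has to run on every node. A small sketch for doing that from master over SSH follows; `zk_all` is a hypothetical helper, and it assumes zkServer.sh is on the PATH set in /etc/profile.

```shell
# Hypothetical helper: run a zkServer.sh subcommand (start, status, stop) on all nodes.
zk_all() {
    for host in master slave1 slave2; do
        echo "== $host =="
        # Non-interactive SSH sessions may not load /etc/profile, so load it explicitly.
        ssh "$host" ". /etc/profile && zkServer.sh $1"
    done
}
```

After `zk_all start`, `zk_all status` should report one node in leader mode and the other two as followers.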

cd /opt/module/hadoop-3.1.3/etc/hadoop

vim core-site.xml

Properties set in core-site.xml:

| Property | Value | Description |
| --- | --- | --- |
| fs.defaultFS | hdfs://cluster | The name of the default file system. A URI whose scheme and authority determine the FileSystem implementation. The uri's scheme determines the config property (fs.SCHEME.impl) naming the FileSystem implementation class. The uri's authority is used to determine the host, port, etc. for a filesystem. |
| hadoop.tmp.dir | /opt/module/hadoop-3.1.3/tmpdir | A base for other temporary directories. |
| ha.zookeeper.quorum | master:2181,slave1:2181,slave2:2181 | A list of ZooKeeper server addresses, separated by commas, that are to be used by the ZKFailoverController in automatic failover. |
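In the file itself, each of these entries is a `<property>` element inside `<configuration>`. As a sketch, the three settings could be written out like this; `write_core_site` is a hypothetical helper that takes the Hadoop configuration directory as its argument, and the descriptions are omitted for brevity.

```shell
# Hypothetical helper: write core-site.xml with the three HA-related properties.
# Usage: write_core_site /opt/module/hadoop-3.1.3/etc/hadoop
write_core_site() {
    conf_dir="$1"
    cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://cluster</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/module/hadoop-3.1.3/tmpdir</value>
  </property>
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>master:2181,slave1:2181,slave2:2181</value>
  </property>
</configuration>
EOF
}
```

Note that fs.defaultFS points at the nameservice ID (cluster) rather than at a single host, which is what lets clients follow a failover.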

vim hdfs-site.xml

Properties set in hdfs-site.xml:

| Property | Value | Description |
| --- | --- | --- |
| dfs.replication | 3 | Default block replication. The actual number of replications can be specified when the file is created. The default is used if replication is not specified in create time. |
| dfs.nameservices | cluster | Comma-separated list of nameservices. |
| dfs.ha.namenodes.cluster | nn1,nn2 | Unique identifiers for each NameNode in the nameservice, delimited by commas. This will be used by DataNodes to determine all the NameNodes in the cluster. For example, if you used "mycluster" as the nameservice ID previously, and you wanted to use "nn1" and "nn2" as the individual IDs of the NameNodes, you would configure a property dfs.ha.namenodes.mycluster, and its value "nn1,nn2". |
| dfs.namenode.rpc-address.cluster.nn1 | master:8020 | The RPC address on which NameNode nn1 listens for client requests. |
| dfs.namenode.rpc-address.cluster.nn2 | slave1:8020 | The RPC address on which NameNode nn2 listens for client requests. |
| dfs.namenode.http-address.cluster.nn1 | master:9870 | The address and the base port where the dfs namenode web ui will listen on. |
| dfs.namenode.http-address.cluster.nn2 | slave1:9870 | The address and the base port where the dfs namenode web ui will listen on. |
| dfs.namenode.shared.edits.dir | qjournal://master:8485;slave1:8485;slave2:8485/cluster | A directory on shared storage between the multiple namenodes in an HA cluster. This directory will be written by the active and read by the standby in order to keep the namespaces synchronized. This directory does not need to be listed in dfs.namenode.edits.dir. It should be left empty in a non-HA cluster. |
| dfs.client.failover.proxy.provider.cluster | org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider | The prefix (plus a required nameservice ID) for the class name of the configured Failover proxy provider for the host. For more detailed information, please consult the "Configuration Details" section of the HDFS High Availability documentation. |
| dfs.ha.automatic-failover.enabled | true | Whether automatic failover is enabled. See the HDFS High Availability documentation for details on automatic HA configuration. |
| dfs.ha.fencing.methods | shell(/bin/true) | A list of scripts or Java classes which will be used to fence the Active NameNode during a failover. See the HDFS High Availability documentation for details on automatic HA configuration. |
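Because the nameservice ID (cluster) and the NameNode IDs (nn1, nn2) are embedded in several property names, generating the file from name/value pairs can reduce the chance of a mistyped key. The sketch below assumes two hypothetical helpers, `prop` and `write_hdfs_site`; the names and values are the ones listed in this post.

```shell
# Hypothetical helper: print one <property> element.
prop() {
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Hypothetical helper: write hdfs-site.xml into the given configuration directory.
write_hdfs_site() {
    {
        echo '<?xml version="1.0" encoding="UTF-8"?>'
        echo '<configuration>'
        prop dfs.replication 3
        prop dfs.nameservices cluster
        prop dfs.ha.namenodes.cluster nn1,nn2
        prop dfs.namenode.rpc-address.cluster.nn1 master:8020
        prop dfs.namenode.rpc-address.cluster.nn2 slave1:8020
        prop dfs.namenode.http-address.cluster.nn1 master:9870
        prop dfs.namenode.http-address.cluster.nn2 slave1:9870
        # Quote values containing ';' or '(' so the shell does not interpret them.
        prop dfs.namenode.shared.edits.dir 'qjournal://master:8485;slave1:8485;slave2:8485/cluster'
        prop dfs.client.failover.proxy.provider.cluster \
            org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider
        prop dfs.ha.automatic-failover.enabled true
        prop dfs.ha.fencing.methods 'shell(/bin/true)'
        echo '</configuration>'
    } > "$1/hdfs-site.xml"
}
```

A call such as `write_hdfs_site /opt/module/hadoop-3.1.3/etc/hadoop` would overwrite the existing file, so back it up first if it contains other settings.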

vim yarn-site.xml

Properties set in yarn-site.xml:

| Property | Value | Description |
| --- | --- | --- |
| yarn.nodemanager.aux-services | mapreduce_shuffle | A comma separated list of services where service name should only contain a-zA-Z0-9_ and can not start with numbers. |
| yarn.resourcemanager.ha.enabled | true | |
| yarn.resourcemanager.cluster-id | yarn-cluster | Name of the cluster. In a HA setting, this is used to ensure the RM participates in leader election for this cluster and ensures it does not affect other clusters. |
| yarn.resourcemanager.ha.rm-ids | rm1,rm2 | The list of RM nodes in the cluster when HA is enabled. See description of yarn.resourcemanager.ha.enabled for full details on how this is used. |
| yarn.resourcemanager.hostname.rm1 | master | The hostname of the RM. |
| yarn.resourcemanager.hostname.rm2 | slave1 | The hostname of the RM. |
| yarn.resourcemanager.webapp.address.rm1 | master:8088 | The http address of the RM web application. If only a host is provided as the value, the webapp will be served on a random port. |
| yarn.resourcemanager.webapp.address.rm2 | slave1:8088 | The http address of the RM web application. If only a host is provided as the value, the webapp will be served on a random port. |
| yarn.resourcemanager.zk-address | master:2181,slave1:2181,slave2:2181 | |

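The remaining configuration files are the same as in the earlier fully distributed Hadoop setup.

6. Distribute Hadoop

7. Start HDFS in HA mode for the first time

7.1. Start the ZooKeeper cluster from the master VM

7.2. Format ZooKeeper on the master VM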

hdfs zkfc -formatZK

7.3. Start the journalnode process on each of master, slave1, and slave2

hadoop-daemon.sh start journalnode

7.4. Then format the NameNode

hdfs namenode -format

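7.5. Start the NameNodes and the cluster

start-all.sh reported an error.

The fix was to add the required entries to the environment variables.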
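The post does not quote the error text. If it is the common Hadoop 3 complaint that a daemon is being operated as root without a matching `*_USER` variable defined (an assumption), the entries to add would look roughly like this sketch, which also assumes the cluster runs as root:

```shell
# Assumption: the daemons are started as root, and start-all.sh refused to run
# because these per-daemon user variables were undefined.
export HDFS_NAMENODE_USER=root
export HDFS_DATANODE_USER=root
export HDFS_JOURNALNODE_USER=root
export HDFS_ZKFC_USER=root
export YARN_RESOURCEMANAGER_USER=root
export YARN_NODEMANAGER_USER=root
```

These lines can go in /etc/profile on every node, followed by source /etc/profile.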

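hadoop-daemon.sh start namenode: start the NameNode on master by itself (Hadoop 3 marks hadoop-daemon.sh as deprecated; hdfs --daemon start namenode is the equivalent)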

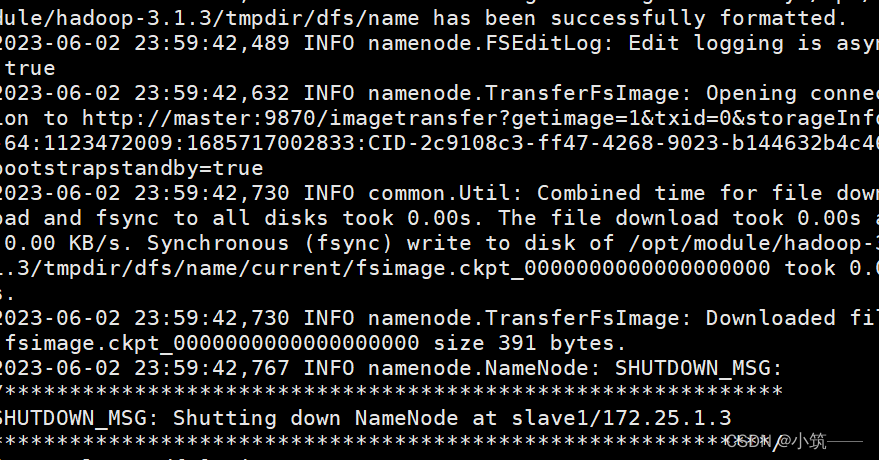

hdfs namenode -bootstrapStandby: then run this on the other VM that will host a NameNode, to sync the metadata from the one already running

Finally, run start-all.sh
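The order of the first-start steps matters, so here is the whole sequence as one sketch, meant to run on master. It assumes ZooKeeper is already running on all three nodes, passwordless SSH works, and slave1 hosts the second NameNode (nn2 in hdfs-site.xml); `first_start_ha` is a hypothetical helper name.

```shell
# Hypothetical helper: first-time HA bring-up, run once on master.
first_start_ha() {
    # 7.2: create the HA znode in ZooKeeper.
    hdfs zkfc -formatZK || return 1
    # 7.3: JournalNodes must be up before formatting, since the shared edits
    # directory lives on them. They may need a few seconds to start listening.
    for host in master slave1 slave2; do
        ssh "$host" ". /etc/profile && hadoop-daemon.sh start journalnode" || return 1
    done
    # 7.4: format the first NameNode (only ever done once).
    hdfs namenode -format || return 1
    # 7.5: start it, then let the standby copy its metadata.
    hadoop-daemon.sh start namenode || return 1
    ssh slave1 ". /etc/profile && hdfs namenode -bootstrapStandby" || return 1
    # Bring up everything else.
    start-all.sh
}
```

On later restarts only start-all.sh (after ZooKeeper) is needed; repeating the format steps would wipe the existing metadata.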

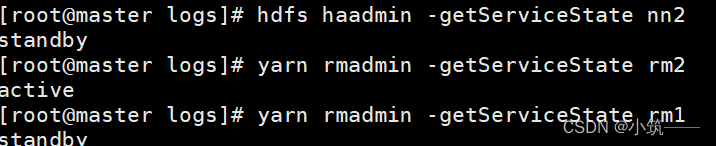

8. On the master node, check the state of services nn2 and rm2

hdfs haadmin -getServiceState nn2

yarn rmadmin -getServiceState rm2
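To see both halves of each HA pair at once, the two commands can be looped over all four service IDs. This is a small sketch with a hypothetical helper name, `ha_states`; the IDs are the ones configured in hdfs-site.xml and yarn-site.xml above.

```shell
# Hypothetical helper: print the HA state of every NameNode and ResourceManager.
ha_states() {
    for nn_id in nn1 nn2; do
        echo "NameNode $nn_id: $(hdfs haadmin -getServiceState "$nn_id")"
    done
    for rm_id in rm1 rm2; do
        echo "ResourceManager $rm_id: $(yarn rmadmin -getServiceState "$rm_id")"
    done
}
```

In a healthy cluster each pair should show exactly one active and one standby.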

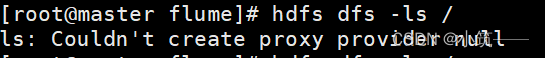

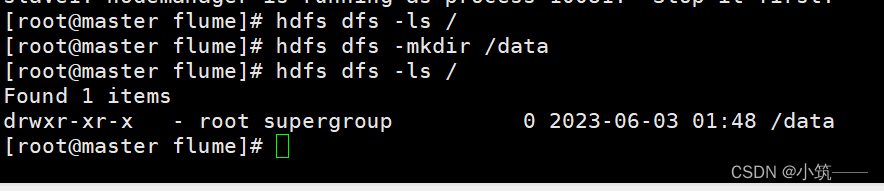

An error came up:

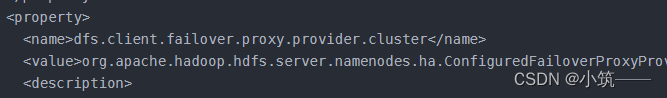

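I checked whether something was mistyped in hdfs-site.xml, and sure enough:

namenode had been typed as namenodes. After correcting it and restarting, everything worked.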
